Introduction: The “Embedded” Problem

When we talk about bias in AI, we often assume the problem is simply missing data—that we just need more diverse patients in the database. While representation is critical, a more insidious problem exists: Bias that is already embedded in the data itself.

Healthcare data is not a neutral record of biology; it is a reflection of a social system. It contains “hidden proxies”—patterns that invisibly disadvantage certain populations before an algorithm is even trained.

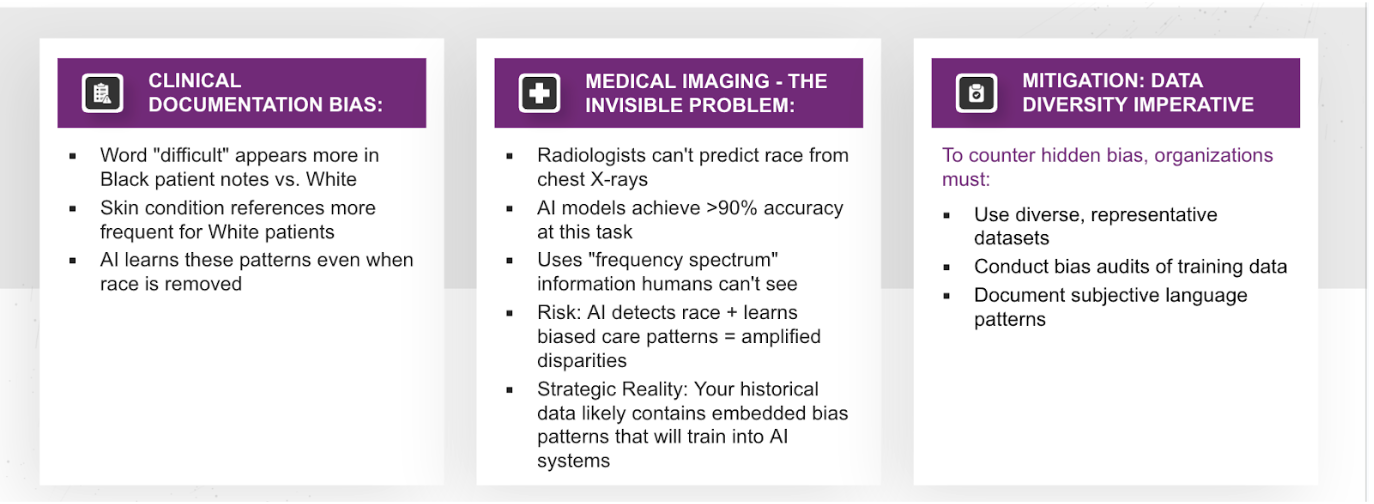

Hidden Proxies in Clinical Documentation

One of the most common sources of bias comes from Subjective Language. Natural Language Processing (NLP) models are often trained on decades of physician notes. However, research shows that the word “difficult” appears significantly more often in notes about Black patients compared to White patients.

This is not a medical observation; it is an unconscious bias reflecting systemic tension. If an AI model learns that “difficult” correlates with “lower adherence,” and “difficult” is a proxy for race, the AI will learn to downgrade care recommendations for Black patients without ever being explicitly told the patient’s race.

Similarly, Clinical Presentation Bias creates gaps in diagnosis. Reference materials for skin conditions have historically skewed heavily toward White patients, creating educational gaps in how dermatological issues are described in darker skin tones. An AI trained on this literature will struggle to diagnose skin cancer in Black or Hispanic patients, simply because the “textbook” language doesn’t match their clinical reality.

Invisible Bias in Medical Imaging

Perhaps most unsettling is what happens in medical imaging. We used to believe that medical images were “colorblind.” We were wrong.

Recent studies have shown that AI can predict a patient’s self-reported race from chest X-rays with an AUC greater than 0.9. This is a feat human radiologists cannot perform. The AI is picking up on “hidden signals” in the image frequency spectra that correlate with race.

Why is this dangerous? If an AI has “superhuman” recognition of race, and it is trained on historical data where minority patients received different standards of care, the model can combine these factors. It risks reinforcing unequal treatment pathways—automating the disparity while hiding it behind a “technical” image analysis.

The Data-Diversity Imperative

Mitigation requires more than just “more data.” It requires a fundamental shift in how we curate datasets:

- Representation Over Volume: A smaller, balanced dataset is often safer than a massive, skewed one.

- Explicit Bias Audits: We must scan training data for these subjective language patterns (like the usage of “difficult”) and strip or weight them appropriately.

- Documentation: We need to document not just what is in the dataset, but who collected it and under what clinical protocols.

Strategic Implications

Data-collection bias cascades through the entire pipeline. These hidden biases distort our standard metrics—sensitivity, specificity, calibration, and discrimination.

To fix this, organizations must audit legacy data and value representation over volume. If we do not detect these proxies during curation, no amount of algorithmic tuning will fix the safety issue downstream.