Introduction: The Pilot and the Algorithm

In this series, we have explored the technical fractures where bias enters the AI pipeline, from hidden proxies in data to the “Group Attribute Paradox” in algorithms. But repairing these fractures one at a time is not enough. To truly ensure equity, healthcare needs a fundamental shift in culture.

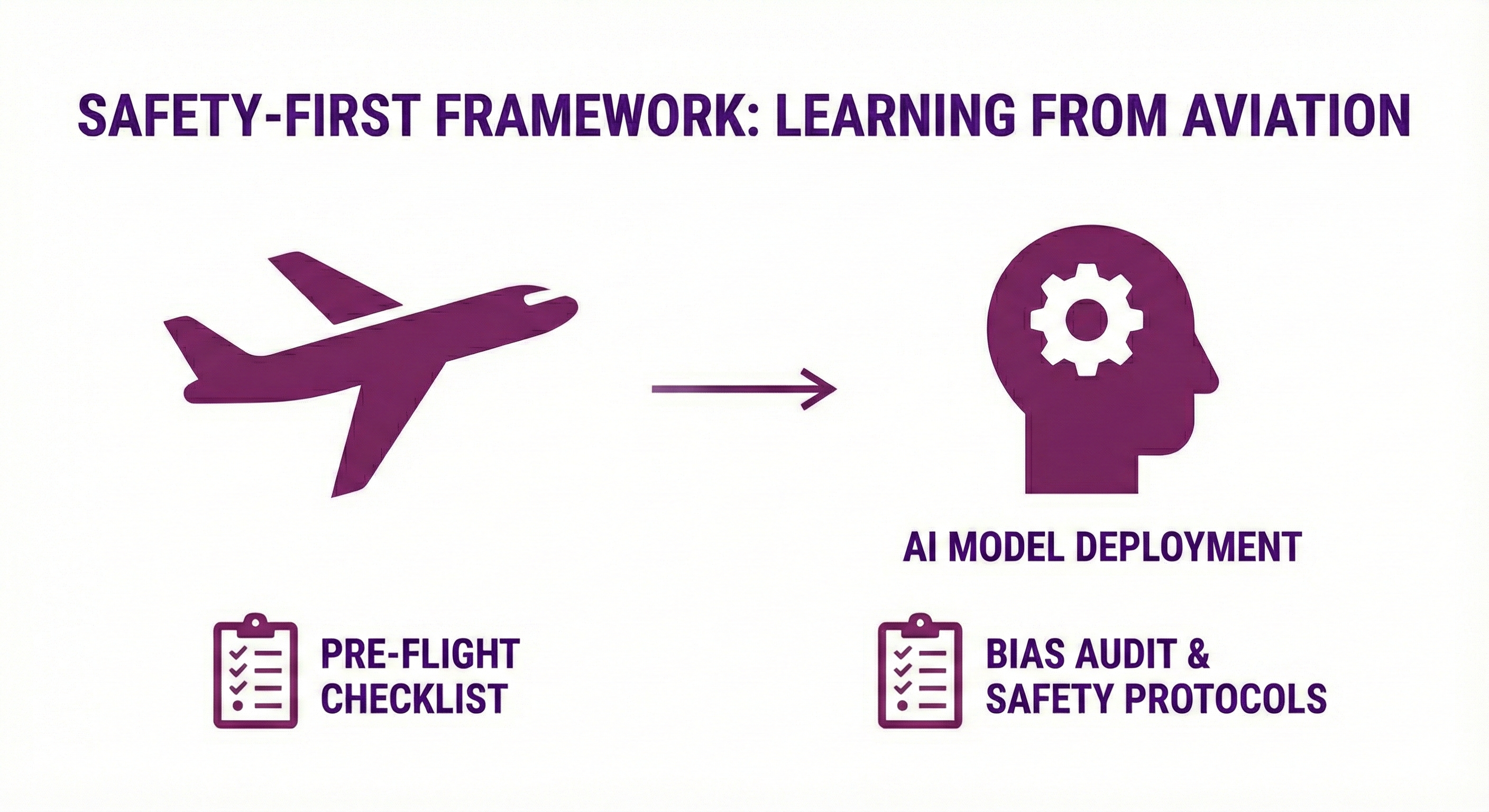

We do not need to invent this culture from scratch. We can look to an industry that has spent decades balancing human judgment with automation: aviation.

Learning from the Skies

Aviation’s safety culture offers a strong model for AI governance. It didn’t achieve its safety record by simply building better engines; it did so by building better systems.

- Regulatory Infrastructure: Continuous feedback loops ensure that a mistake in one plane teaches a lesson to every plane.

- Safety-First Culture: The focus is on system improvement, not individual blame.

- Clear Liability Frameworks: Rules that encourage transparency rather than secrecy.

- Simulation Training: Pilots spend hundreds of hours in simulators to build “human-automation trust” before they ever fly passengers.

The Healthcare Parallel

Imagine if we treated AI deployment like a flight. We wouldn’t just check if the “engine” (the algorithm) starts; we would simulate the workflow, stress-test the decision thresholds across different demographics (the weather conditions), and ensure the clinician (the pilot) knows exactly when to disengage the autopilot.

Systematic Implementation

To adopt this “Safety-First” framework in oncology, we must apply these principles across the entire lifecycle:

- Data Foundation: Building diverse datasets managed by inclusive teams to prevent “blind spots.”

- Comprehensive Evaluation: Moving beyond aggregate accuracy to analyze behavior across every demographic subgroup.

- Human-Centered Integration: Designing interfaces that support clinician autonomy rather than replacing it.
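
To make the "Comprehensive Evaluation" step concrete, here is a minimal sketch of what a subgroup breakdown could look like. It is an illustration under stated assumptions, not a validated audit tool: the record layout `(group, true_label, risk_score)`, the function names `subgroup_report` and `sensitivity_gap`, the 0.5 threshold, and the toy data are all hypothetical.

```python
from collections import defaultdict


def subgroup_report(records, threshold=0.5):
    """Per-subgroup sensitivity and specificity at one decision threshold.

    Each record is a (group, true_label, risk_score) tuple. This layout is
    an assumption made for the sketch, not a standard format.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, label, score in records:
        predicted = 1 if score >= threshold else 0
        if label == 1:
            key = "tp" if predicted == 1 else "fn"
        else:
            key = "tn" if predicted == 0 else "fp"
        counts[group][key] += 1

    report = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        report[group] = {
            "n": positives + negatives,
            # None marks a subgroup with no cases of that class.
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return report


def sensitivity_gap(report):
    """Largest difference in sensitivity between any two subgroups."""
    values = [m["sensitivity"] for m in report.values()
              if m["sensitivity"] is not None]
    return max(values) - min(values) if values else None


# Toy, made-up data: the model is right on 6 of 8 cases overall,
# yet it misses half of the true positives in group "B".
records = [
    ("A", 1, 0.9), ("A", 1, 0.8), ("A", 0, 0.2), ("A", 0, 0.1),
    ("B", 1, 0.7), ("B", 1, 0.4), ("B", 0, 0.3), ("B", 0, 0.6),
]
report = subgroup_report(records, threshold=0.5)
gap = sensitivity_gap(report)
```

In this toy example the aggregate accuracy looks acceptable, while the per-group view shows that every missed case falls in one subgroup. Rerunning the same report at several thresholds is one simple way to approximate the "stress test" described earlier.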

An Ongoing Process, Not a Destination

Ethical AI is not a box you check during software installation. It demands ongoing vigilance. Just as aviation safety is never “finished,” AI safety requires continuous monitoring and adaptive integration strategies, along with a culture of “system-wide learning” where finding a bias is treated as a successful safety catch, not a failure to be punished.

Strategic Imperative: The 4th Win

Bias in healthcare AI cannot be solved by algorithms alone. Organizations must embrace a safety-first, system-wide model that balances automation with accountability.

This post bridges the transition from the technical excellence we discussed in our earlier papers to the ethical resilience required for the future. It challenges healthcare organizations to move from building “performant models” to building “trustworthy systems.”

When technological innovation and clinical equity advance together, we create a true Win–Win–Win–Win: for patients, for clinicians, for the healthcare system, and for society itself.

Authored By: Padmasri Bhetanabhotla